Highlights

Propose neural dual quaternion blend skinning (NeuDBS) as our deformation model to replace LBS, which can resolve the skin-collapsing artifacts.

Introduce a texture filtering approach for texture rendering that effectively minimizes the impact of noisy colors outside target deformable objects.

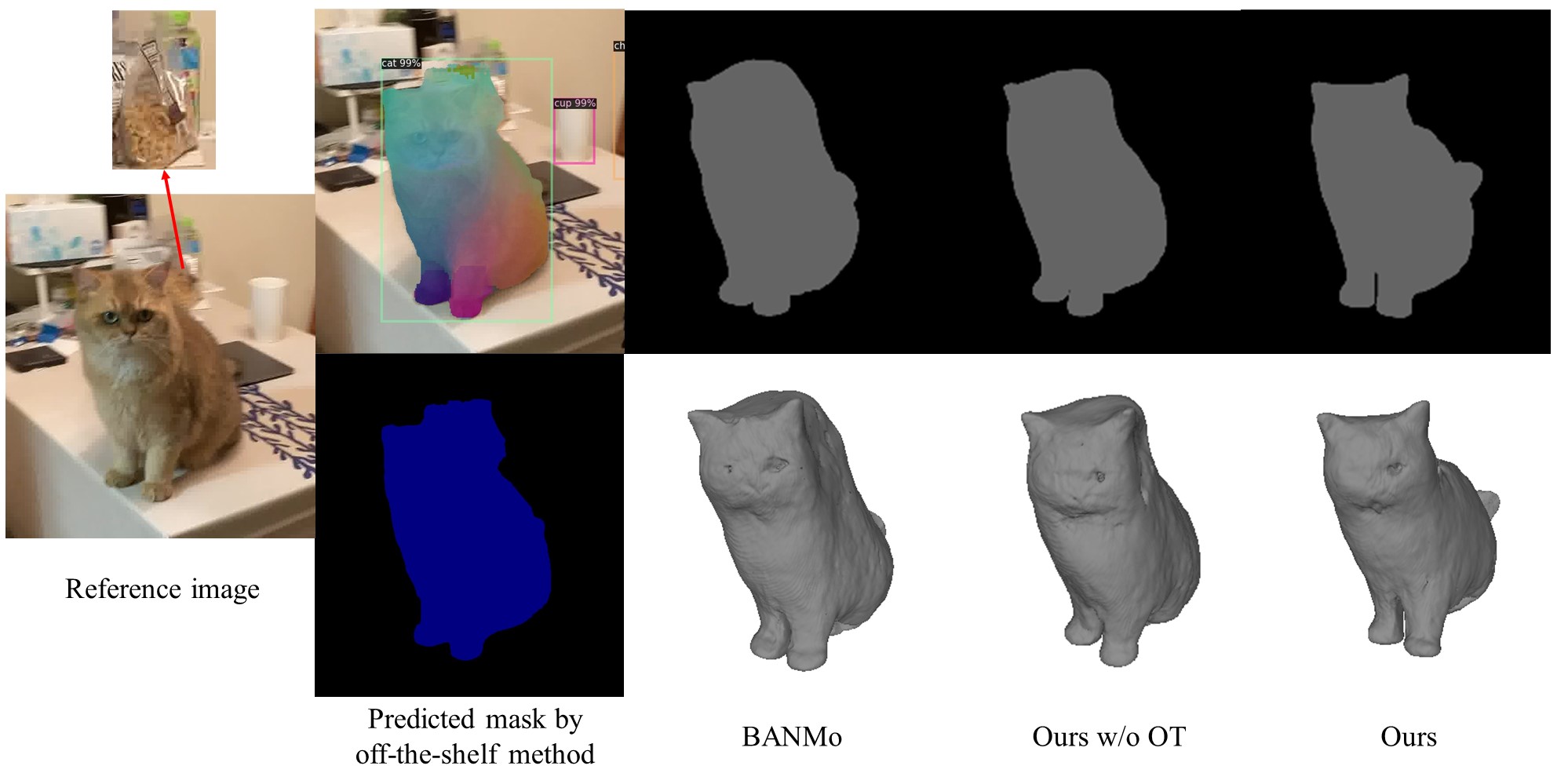

Formulate the 2D-3D matching as an optimal transport problem that helps to refine the bad segmentation obtained from a off-the-shelf method and predict the consistent 3D shape.

Reconstruction

We compare reconstruction results of MoDA and BANMo, the skin-collapsing artifacts of BANMo are marked with red circles.

More reconstruction result can be found at Casual-adult (10 videos), Casual-human (10 videos), Casual-cat (11 videos), AMA (swing and samba of 16 videos).

Optimal transport for 2D-3D matching

By registering 2D pixels across different frames with optimal transport, we can refine the bad segmentation and predict the consistent 3D shape of the cat.

Ablation study on texture filtering

We show the effectiveness of texture filtering appraoch by adding it to both MoDA and BANMo.

Motion re-targeting

We compare the motion re-targeting results of MoDA and BANMo.

Related Links

BibTeX

@article{song2024moda,

title={Moda: Modeling deformable 3d objects from casual videos},

author={Song, Chaoyue and Wei, Jiacheng and Chen, Tianyi and Chen, Yiwen and Foo, Chuan-Sheng and Liu, Fayao and Lin, Guosheng},

journal={International Journal of Computer Vision},

pages={1--20},

year={2024},

publisher={Springer}

}